Cyber Security Awareness Month - Day 30 - DSD 35 mitigating controls

Nearing the end of the month, it would be remiss not to mention the DSD 35 mitigating strategies. Whilst not strictly a standard, they provide useful guidance. The Defence Signals Directorate (DSD) is an Australian government body that deals with many things called Cyber. Amongst other things, it is responsible for providing guidance to Australian Government agencies and has produced the Information Security Manual (ISM) for years.

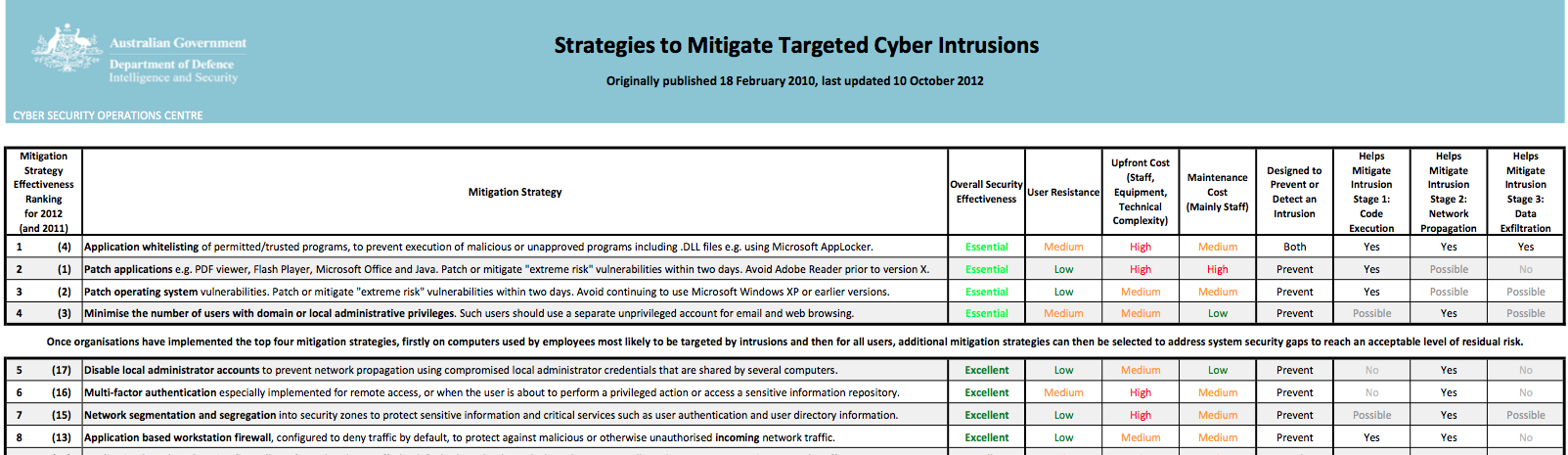

In the past few years they have expanded on this and produced the DSD 35 mitigating strategies. The strategies are based on DSD's examination of intrusions into government systems and were developed to address the main weaknesses which, had they been mitigated, would have prevented the breaches in the first place. In fact, DSD states that implementing just the top 4 strategies would have prevented at least 85% of the intrusions.

The top four are:

- Application whitelisting

- Patch Applications

- Patch operating system vulnerabilities

- Minimise the number of users with domain or local administrative privileges.

Implementing the top 4 (Some general hints anyway)

Application whitelisting

Application whitelisting is one of the more effective methods of preventing malware from executing, and therefore of stopping the attack from being effective. The main argument you will hear against it is that application whitelisting is difficult to achieve, which in a sense is correct: it will take effort to get the environment to the point where everything functions as it should. However, in the text following the top 4 there is a good piece of advice from DSD: "Once organisations have implemented the top four mitigation strategies, firstly on computers used by employees most likely to be targeted by intrusions and then for all users, additional mitigation strategies can then be selected to address system security gaps to reach an acceptable level of residual risk." In other words, address the high-risk users and issues first and then propagate the control to the rest of the organisation.

There are a number of tools available that will implement application whitelisting and carry out the initial profiling of systems needed to get the whitelist right. A number of endpoint products are also capable of enforcing it, and of course AppLocker in Windows can also do the job. When implementing it, make sure you do so in test environments first to sort out the issues.
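To make the idea concrete, here is a minimal sketch of hash-based whitelisting in Python. It assumes a simple model in which a profiling phase records the SHA-256 hash of every executable on a known-good build, and enforcement then refuses anything whose hash is not on that list. The function names (`build_allowlist`, `may_execute`) are hypothetical, and this is not how AppLocker or any particular endpoint product is implemented; real products also offer rules based on path or publisher.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_allowlist(profiled_files) -> set[str]:
    """Profiling phase (hypothetical): hash every executable seen on a known-good build."""
    return {sha256_of(Path(p)) for p in profiled_files}


def may_execute(path, allowlist: set[str]) -> bool:
    """Enforcement phase: default deny, only files whose hash is on the list may run."""
    return sha256_of(Path(path)) in allowlist
```

The default-deny check is the important design choice: a dropped or modified binary has no matching hash, so it does not run. The flip side is that every legitimate update changes the hash as well, which is one reason the profiling and testing effort is needed.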

Patch Applications

Patching applications is something we all should be doing, but it can be difficult to achieve. One issue I come across is "the vendor won't let us". Providers of certain applications, usually expensive ones, will not allow the environment to be changed: if you patch the operating system or supporting products, they won't provide support. In one extreme case I'm aware of, the vendor insisted the operating system be reverted to XP SP2 before they would provide support. Those situations are difficult to resolve and unfortunately I can't help you out there. However, going forward, it may be a good idea to make sure that support contracts allow the operating system and other supporting products to be patched without penalty. As a minimum, identify what really can't change and what can.

So, outside of those applications that are just too hard, implement a process that patches the applications that can be patched, and consider removing those that are not really needed. For the applications that are too hard, you will have to find other controls that help reduce the risk to them. Possibly strategy one?
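One way to start on the "what can and can't change" exercise is to triage the application inventory. The sketch below assumes a hypothetical inventory in which each application is marked as still needed or not, and as patchable or not (for example, because the vendor won't support a patched environment). The `App` fields and the example applications are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class App:
    name: str
    still_needed: bool  # hypothetical: does the business still use it?
    patchable: bool     # hypothetical: can it be patched without losing vendor support?


def triage(apps):
    """Split an application inventory into remove / patch / compensate buckets."""
    plan = {"remove": [], "patch": [], "compensate": []}
    for app in apps:
        if not app.still_needed:
            # Removing unneeded software eliminates its patching burden entirely
            plan["remove"].append(app.name)
        elif app.patchable:
            plan["patch"].append(app.name)
        else:
            # Too hard to patch: needs other controls, e.g. application whitelisting
            plan["compensate"].append(app.name)
    return plan


inventory = [
    App("PDF reader", True, True),
    App("Legacy billing system", True, False),
    App("Old toolbar", False, True),
]
print(triage(inventory))
# {'remove': ['Old toolbar'], 'patch': ['PDF reader'], 'compensate': ['Legacy billing system']}
```

Unneeded applications are checked first because removing them is cheaper than patching them, whatever their patch status.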

Patch operating system vulnerabilities

Many organisations have this sorted reasonably well, and a number of operating systems provide a relatively robust process for updating operating system components. One of the clients I work with does the following, which works for them. When an advance notification is issued, the bulletin is analysed and a determination is made whether the patch needs to be applied and how quickly. Once the patches are released, they are implemented in the DEV environment straight away and systems are tested. Assuming they do not break anything, the patches are then implemented in UAT and other non-production environments, and systems are tested again (a standard, mostly automated testing process). Production implementations are scheduled for Sunday/Monday and, assuming no issues stop the implementation, everything is patched by Monday morning. It takes effort, but with some automation the impact can be reduced. There are also a number of products on the market that assist with the patching process and simplify life.
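The staged process above can be sketched as a simple promotion loop. This is a hedged illustration, not the client's actual tooling: `deploy` and `tests_pass` are hypothetical hooks standing in for whatever patch-management product and automated test suite an organisation uses, other non-production environments are folded into UAT, and running tests after the production deployment is an assumption.

```python
from typing import Callable

# Stage order taken from the process described above
STAGES = ["DEV", "UAT", "PROD"]


def roll_out(patch_id: str,
             deploy: Callable[[str, str], None],
             tests_pass: Callable[[str], bool]) -> list[str]:
    """Promote a patch stage by stage, halting at the first stage whose tests fail.

    Returns the stages where the patch was deployed and passed testing.
    """
    completed = []
    for stage in STAGES:
        deploy(patch_id, stage)
        if not tests_pass(stage):
            # Stop here: later stages, and ultimately production, are not touched
            break
        completed.append(stage)
    return completed
```

The point of the loop is that a patch only reaches production after it has survived every earlier environment; a failure in DEV or UAT stops the rollout before the Sunday/Monday production window.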

Minimise the number of users with domain or local administrative privileges.

Removing admin rights will also take a little bit of effort. Identify those who have administrative rights, domain or local, and identify which functions or roles they actually perform that require those full rights. Take local admin rights as an example. There are some applications that really do require the user to have local administrative rights. However, there are also plenty that "need" them for the sake of convenience: rather than figuring out what access is really needed, admin rights are simply given. Some applications you come across need admin rights only the first time they are run, and not after that. Your objective should be to remove all local admin rights from users and reduce domain administrative rights to as few accounts as possible.

You will need to test before implementing in production.
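The review step can be sketched as follows, assuming a hypothetical export of accounts that records whether each user holds local or domain admin rights and which tasks, if any, genuinely require those rights once their role has been checked. Anyone holding admin rights without a justified task is a candidate for removal; the remaining domain admins are listed so that number can be kept as low as possible. The `Account` structure and user names are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    user: str
    local_admin: bool
    domain_admin: bool
    # Hypothetical: tasks this person performs that genuinely need full rights,
    # established by reviewing their role rather than taken on trust
    admin_tasks: list[str] = field(default_factory=list)


def review(accounts):
    """Return (users whose admin rights should be removed, justified domain admins)."""
    revoke = [a.user for a in accounts
              if (a.local_admin or a.domain_admin) and not a.admin_tasks]
    remaining_domain_admins = [a.user for a in accounts
                               if a.domain_admin and a.admin_tasks]
    return revoke, remaining_domain_admins


accounts = [
    Account("alice", local_admin=True, domain_admin=False),
    Account("bob", local_admin=False, domain_admin=True, admin_tasks=["AD maintenance"]),
    Account("carol", local_admin=True, domain_admin=True),
    Account("dave", local_admin=False, domain_admin=False),
]
print(review(accounts))
# (['alice', 'carol'], ['bob'])
```

As with the other strategies, the output is only a starting list: test the impact of each removal before doing it in production.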

If it all seems overwhelming, break it down into smaller jobs. Start with the devices that are critical to the organisation and then expand the effort. Once done, you will have reduced the risk to the organisation and can start looking at implementing the remaining 31 controls. As I said at the start, this is not necessarily a standard, but how often can you say that you know of a way to reduce the risk of targeted attacks by 85% or more?

I'm interested in finding out how you may have implemented one or more of the top 4 controls, please share by commenting, or let us know via the contact form and I'll add contributions later in the week.

Mark H - Shearwater

http://www.dsd.gov.au/infosec/top35mitigationstrategies.htm

Hurricane Sandy Update

Last night's storm cut power to millions of households across much of the north east of the US and parts of Canada. The outages affect major population centers, including New York City.

At this point, the damage to infrastructure appears to be substantial and recovery may take days to weeks.

We have not heard of any outages of east coast services like Amazon's cloud or Google's web services hosted in the area. We will try to keep you updated as we hear about any larger outages, but right now only some individual web sites are affected. This may change if the power outages persist.

If you reside in the affected area, you are probably best off staying at home. Many roads are blocked by debris and, in some cases, by downed power lines.

Here are some of the typical issues we see after an event like this:

- outages of communications networks as batteries and generator fuel supplies run out.

- malware using the disaster as a ruse to get people to install the malicious software ("watch this video of the flooding")

- various scams trying to take advantage of disaster victims.

A couple of ways the internet can help in a disaster like this:

- many power companies offer web pages to report and monitor outages.

- FEMA offers updates on its "ready.gov" and "disasterassistance.gov" web sites.

- local governments offer mobile applications to keep residents informed.

Twitter can provide very fast and localized updates, but beware that Twitter is also used to spread misinformation.

A lot has been made of tweets that suggest organized looting. The posts I have seen appear to be meant as a joke when read alongside other tweets by the same person. In some cases the person doesn't live in the area, or the account is very new. Remember, it is hard to detect irony in 140 characters.

We hope everybody in the affected area stays safe. The storm is still ongoing and internet outages are probably the least significant issue right now.

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute
